I wanted to push data from Treasure Data into Azure SQL. The data was row-based and roughly 1 TB in size.

There were quite a few options to examine, but in the end the easy ones were just not enough. I’ll walk through the options I considered and my thoughts on each.

Pushing from TD

- Push from Treasure Data using the MS SQL connector. The good part of this option is that it’s built into Treasure Data and supports things such as pushing only updates. I was hoping it would work, as this would have made my job a lot easier. However, pushes to Azure SQL continually timed out. After contacting TD support (who are *very* responsive), I kept reducing the array size until the data transfer was functional, but by then the throughput was so poor that moving the amount of data I wanted was a non-starter. On to option 2.

- The second option I tried was also from the TD console. I pushed data from TD into Blob storage at Azure. This went fairly smoothly, but when I wanted to rename the file I was placing in Azure, I was not able to do so. On to option 3.

- The third option I tried was to write a script using the TD command line. This gave me all the flexibility I wanted for naming the file, as well as for adding parameters so that I could move other tables in the future. Since I was starting a TD job, all the built-in monitoring and logging for TD also kicked in. The compression and security for transport also just worked. Nice.

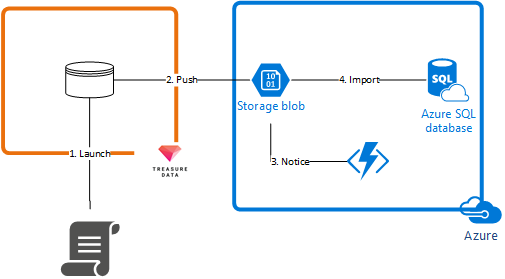

So, that was it for pushing the data out of TD, which covers steps 1 and 2 in the architecture diagram. Now to look at pulling the data into Azure SQL.

Import into Azure SQL

- On the Azure side, I wanted to be notified whenever a new file showed up in blob storage. This turned out to be a nice fit for Azure Functions. There were two versions of Azure Functions at the time: v1, which is in GA now, and v2, which is in preview. My requirements were pretty basic, so I took a chance on the preview version (v2). Turns out writing an Azure Function in C# is really easy. I wrote two functions: one to uncompress the incoming file and a second to load the .csv file into Azure SQL. This item covers steps 3 and 4 of the architecture diagram.

- Turns out there’s a new shiny on the horizon: Azure Data Factory (currently in preview). I suspect I’ll move the Azure Functions logic into Azure Data Factory as it moves from preview into GA. The main reasons in my mind are improved monitoring and alerting.
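
As a rough illustration of the two functions described above (one to uncompress the incoming file, one to load the .csv), here is a minimal Python sketch of the same two steps. It is not the C# code I actually wrote. It assumes the file is gzip-compressed and has a header row, neither of which is stated above, and the names `uncompress`, `csv_batches`, `load`, and the `insert_batch` callback are all made up for the example. In a real deployment `insert_batch` would wrap whatever bulk insert your database driver offers.

```python
import csv
import gzip
import io
from typing import Callable, Iterator, List

Row = List[str]


def uncompress(blob_bytes: bytes) -> bytes:
    """Step 1: decompress the incoming blob (gzip is an assumption)."""
    return gzip.decompress(blob_bytes)


def csv_batches(csv_bytes: bytes, batch_size: int = 1000) -> Iterator[List[Row]]:
    """Parse CSV text, skip the (assumed) header row, and yield rows in batches."""
    reader = csv.reader(io.StringIO(csv_bytes.decode("utf-8"), newline=""))
    next(reader, None)  # skip header row if present
    batch: List[Row] = []
    for row in reader:
        batch.append(row)
        if len(batch) >= batch_size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


def load(csv_bytes: bytes,
         insert_batch: Callable[[List[Row]], None],
         batch_size: int = 1000) -> int:
    """Step 2: hand each batch to a caller-supplied insert function.

    `insert_batch` is a hypothetical callback standing in for the
    database write. Returns the total number of rows passed to it.
    """
    total = 0
    for batch in csv_batches(csv_bytes, batch_size):
        insert_batch(batch)
        total += len(batch)
    return total
```

Batching keeps memory use flat and lets you tune the batch size against timeouts, which was the same knob that mattered when pushing directly from TD. To try it locally, call `load(uncompress(data), rows.append)` with `rows = []` to collect the batches in a list instead of writing to a database.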